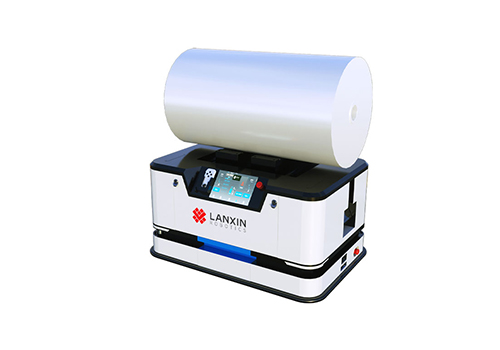

VMR-FR3300L

Lanxin, a leader in artificial intelligence and robotics, recently reached a significant milestone: the Zhejiang Provincial Department of Science and Technology officially awarded the company the Science and Technology Achievement Registration Certificate (Registration No.: DJ101002024Y0420). The recognition highlights the company's pioneering research in AI and robotic technologies and affirms the value and impact of its work in these areas.

Summary of the Recognized Technological Achievement: "3D Vision Perception and Cluster Intelligent Applications for Mobile Robots in Ultra-Large Complex Scenarios"

Mission and Significance

As industrial mobile robots are applied more widely, their operating environments are becoming larger and more complex, and precision requirements are rising. The number of mobile robots that must be coordinated at a single site continues to grow with the scale of business operations. Ultra-large complex scenarios present new challenges: within these expansive and highly dynamic environments, large numbers of robots must be coordinated, and each robot must still perform precise operations on difficult targets such as reflective or small objects. Establishing a comprehensive technical framework for precise mobile robot operations in ultra-large, complex scenarios, and bringing it to industrial-grade application, is therefore crucial for gaining a competitive edge in the global industry.

The AMR (Autonomous Mobile Robot) technology field is extensive and complex. Current domestic and international advancements in sensor technology, navigation, and robotic scheduling have not yet fully adapted to the precise operational requirements of robots in ultra-large complex environments. The core issue lies in the need to enhance robots' capabilities for comprehensive real-time perception and understanding of dynamic, complex environments and their own operations. This project centers on "3D Vision Fusion Perception + AI Large Model Technology" to build a complete technical system for semantic SLAM natural navigation, target object pose estimation, autonomous robotic arm trajectory planning, high-precision motion control, and large-scale mobile robot scheduling in complex environments. This system better adapts to diverse, customized, and large-scale manufacturing scenarios, broadening the application scope of AMRs and enhancing stability, safety, and precision.

Technical Challenges

With the expanding industrial applications of AMRs, personalized and flexible manufacturing is becoming the core driving force of future smart factories. The manufacturing industry is demanding higher complexity and precision, especially for mobile robots operating in ultra-large, highly dynamic complex environments. However, several technical bottlenecks remain:

In large-scale AMR operations, sensors must perceive the environment at distances of 30 meters or more with a wide field of view and high resolution. However, few domestic sensors meet these long-range perception needs, and mainstream 3D vision sensors, both domestic and international, typically suffer from an insufficient field of view and low resolution.

In recent years, mobile robots have primarily relied on laser-based SLAM (Simultaneous Localization and Mapping) technology for scene mapping and information acquisition. However, this approach faces significant challenges in spatial localization robustness and is also costly.

In practical operations, robots moving over large areas need to perform precise tasks on complex targets such as reflective or small objects. Robots often face recognition errors that affect operational precision and reliability. Furthermore, there is a lack of autonomous obstacle avoidance trajectory planning and high-precision motion control technology for robotic arms in complex environments.

The complexity of scheduling algorithms increases exponentially with the number of mobile robots. Traditional methods, such as multi-map segmentation for route planning, are limited in scheduling scale and in single-map scheduling capability. Breaking down data silos to achieve efficient coordination between "individual" and "group" intelligence, as well as between "mobile robots" and "docking stations," remains a pressing challenge for the industry.

Key Innovations

The project developed a proprietary 3D vision sensor with long-range, wide-area, and high-resolution perception capabilities. A single sensor supports long-range positioning and navigation, mid-range obstacle avoidance, and close-range high-precision docking for mobile robots.

Using the semantic association between 2D and 3D co-visible frames, the project constructed a deep-learning-based dense SLAM system for industrial applications. By implementing techniques such as dynamic object filtering, static feature enhancement, and motion estimation from blurred images, the system enables markerless natural navigation for mobile robots in highly dynamic environments.
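As a rough illustration of what dynamic object filtering can look like, the sketch below drops 3D points whose semantic label belongs to a movable class before they are used for mapping. It is not Lanxin's implementation: the `LabeledPoint` type, the `DYNAMIC_LABELS` set, and the class names are all assumptions made for this example, and the labels are assumed to come from some upstream segmentation model.

```python
from dataclasses import dataclass

# Hypothetical label set: the source does not say which classes count as dynamic.
DYNAMIC_LABELS = {"person", "forklift", "mobile_robot"}


@dataclass(frozen=True)
class LabeledPoint:
    x: float
    y: float
    z: float
    label: str  # semantic class assigned by an assumed upstream segmentation model


def filter_dynamic_points(points, dynamic_labels=DYNAMIC_LABELS):
    """Keep only points whose semantic label is not in the dynamic set."""
    return [p for p in points if p.label not in dynamic_labels]


# Illustrative frame with made-up coordinates and labels.
frame = [
    LabeledPoint(1.0, 0.2, 0.0, "floor"),
    LabeledPoint(2.5, 1.1, 0.9, "person"),
    LabeledPoint(4.0, -0.3, 1.5, "rack"),
    LabeledPoint(3.2, 0.8, 0.4, "forklift"),
]
static_points = filter_dynamic_points(frame)
print([p.label for p in static_points])
```

Only the points labeled as static structure remain, so moving people and vehicles do not end up as landmarks in the map.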

The project developed a "feature-image" generative pose estimation method. Using a manifold description of the six-dimensional space, it constructed a dual-modal decoder for pose mimicry and joint feature residuals to achieve precise recognition of complex targets. The project also established autonomous obstacle avoidance trajectory planning for robotic arms in complex environments, generating optimal operational trajectories, and introduced reinforcement learning motion control for robotic arms based on dynamics identification feedforward, achieving high-precision operational movement.

The project implemented a distributed scheduling system using a conflict-free clustering approach, overcoming the scheduling-scale limits of competitors' traditional methods such as multi-server expansion and multi-map segmentation. By focusing on task management, matching tasks to different processes, and leveraging an optimization algorithm based on graph convolutional neural networks, the project achieved, for the first time, coordinated scheduling of thousands of mobile robots on a single map.
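The announcement does not describe how the conflict-free clustering works. One plausible reading, offered only as an assumption, is that robots are partitioned into groups that share no map resources, so each group can be scheduled independently. The sketch below illustrates that general idea with a union-find structure; the function name `cluster_by_shared_cells`, the route format, and the robot and cell identifiers are all hypothetical.

```python
def cluster_by_shared_cells(routes):
    """Group robots whose planned routes share at least one map cell.

    routes: dict mapping robot id -> iterable of cell ids (hypothetical format).
    Returns a list of sets of robot ids; robots in different sets share no cell.
    """
    parent = {robot: robot for robot in routes}

    def find(r):
        # Follow parent links to the group representative, halving paths as we go.
        while parent[r] != r:
            parent[r] = parent[parent[r]]
            r = parent[r]
        return r

    first_robot_on_cell = {}
    for robot, cells in routes.items():
        for cell in cells:
            if cell in first_robot_on_cell:
                # Two robots want the same cell: merge their groups.
                parent[find(robot)] = find(first_robot_on_cell[cell])
            else:
                first_robot_on_cell[cell] = robot

    groups = {}
    for robot in routes:
        groups.setdefault(find(robot), set()).add(robot)
    return list(groups.values())


# Illustrative routes with made-up robot and cell names.
routes = {
    "amr1": ["A", "B", "C"],
    "amr2": ["C", "D"],
    "amr3": ["X", "Y"],
    "amr4": ["Y", "Z"],
    "amr5": ["Q"],
}
clusters = cluster_by_shared_cells(routes)
print(sorted(sorted(c) for c in clusters))
```

Under this reading, each resulting group could be handed to a separate scheduler instance, because no route in one group touches a cell used by another group.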

The evaluation committee concluded that this project systematically developed the core technologies and software-hardware systems for 3D vision perception and cluster intelligent applications for mobile robots in ultra-large, complex scenarios. The overall achievement is internationally advanced, with the 3D vision perception technology of mobile robots reaching a world-leading level.

Contact Us

Lanxin Technology, 7-802, China Artificial Intelligence Town, No.1818-2 Wenyi West Road, Hangzhou, Zhejiang, China

marketing@lanxincn.com
